import pandas as pd

import numpy as np

import wooldridge

import statsmodels.formula.api as smf

import matplotlib.pyplot as pltwooldridge.data() J.M. Wooldridge (2019) Introductory Econometrics: A Modern Approach,

Cengage Learning, 6th edition.

401k 401ksubs admnrev affairs airfare

alcohol apple approval athlet1 athlet2

attend audit barium beauty benefits

beveridge big9salary bwght bwght2 campus

card catholic cement census2000 ceosal1

ceosal2 charity consump corn countymurders

cps78_85 cps91 crime1 crime2 crime3

crime4 discrim driving earns econmath

elem94_95 engin expendshares ezanders ezunem

fair fertil1 fertil2 fertil3 fish

fringe gpa1 gpa2 gpa3 happiness

hprice1 hprice2 hprice3 hseinv htv

infmrt injury intdef intqrt inven

jtrain jtrain2 jtrain3 kielmc lawsch85

loanapp lowbrth mathpnl meap00_01 meap01

meap93 meapsingle minwage mlb1 mroz

murder nbasal nyse okun openness

pension phillips pntsprd prison prminwge

rdchem rdtelec recid rental return

saving sleep75 slp75_81 smoke traffic1

traffic2 twoyear volat vote1 vote2

voucher wage1 wage2 wagepan wageprc

wine

Examples¶

wage1 = wooldridge.data("wage1")

meap93 = wooldridge.data("meap93")

gpa1 = wooldridge.data("gpa1")

campus = wooldridge.data("campus")

hprice2 = wooldridge.data("hprice2")

k401 = wooldridge.data("401k")

jtrain = wooldridge.data("jtrain")

rdchem = wooldridge.data("rdchem")

bwght = wooldridge.data("bwght")4.1 Hourly Wage Equation¶

wooldridge.data("wage1", description=True)name of dataset: wage1

no of variables: 24

no of observations: 526

+----------+---------------------------------+

| variable | label |

+----------+---------------------------------+

| wage | average hourly earnings |

| educ | years of education |

| exper | years potential experience |

| tenure | years with current employer |

| nonwhite | =1 if nonwhite |

| female | =1 if female |

| married | =1 if married |

| numdep | number of dependents |

| smsa | =1 if live in SMSA |

| northcen | =1 if live in north central U.S |

| south | =1 if live in southern region |

| west | =1 if live in western region |

| construc | =1 if work in construc. indus. |

| ndurman | =1 if in nondur. manuf. indus. |

| trcommpu | =1 if in trans, commun, pub ut |

| trade | =1 if in wholesale or retail |

| services | =1 if in services indus. |

| profserv | =1 if in prof. serv. indus. |

| profocc | =1 if in profess. occupation |

| clerocc | =1 if in clerical occupation |

| servocc | =1 if in service occupation |

| lwage | log(wage) |

| expersq | exper^2 |

| tenursq | tenure^2 |

+----------+---------------------------------+

These are data from the 1976 Current Population Survey, collected by

Henry Farber when he and I were colleagues at MIT in 1988.

model01 = smf.ols("np.log(wage) ~ educ + exper + tenure", data=wage1).fit()

model01.summary2().tables[1].iloc[:, :2]We got exper has with a standard error of 0.0017

SO the t statistic is , t critical is 1.645 at 5% and 2.326 at 1%,

t statistic > t critical we reject the null hypothesis at 1% significance level

is greater than zero

4.2 Student Performance And School Size¶

wooldridge.data("meap93", description=True)name of dataset: meap93

no of variables: 17

no of observations: 408

+----------+---------------------------------+

| variable | label |

+----------+---------------------------------+

| lnchprg | perc of studs in sch lnch prog |

| enroll | school enrollment |

| staff | staff per 1000 students |

| expend | expend. per stud, $ |

| salary | avg. teacher salary, $ |

| benefits | avg. teacher benefits, $ |

| droprate | school dropout rate, perc |

| gradrate | school graduation rate, perc |

| math10 | perc studs passing MEAP math |

| sci11 | perc studs passing MEAP science |

| totcomp | salary + benefits |

| ltotcomp | log(totcomp) |

| lexpend | log of expend |

| lenroll | log(enroll) |

| lstaff | log(staff) |

| bensal | benefits/salary |

| lsalary | log(salary) |

+----------+---------------------------------+

I collected these data from the old Michigan Department of Education

web site. See MATHPNL.RAW for the current web site. I used data on

most high schools in the state of Michigan for 1993. I dropped some

high schools that had suspicious-looking data.

model02 = smf.ols("math10 ~ totcomp + staff + enroll", data=meap93).fit()

model02.summary2().tables[1].iloc[:, :2]It is believed that smaller schools are associated with higher test scores

We get similar to the conjecture

The critical t is at degree of freedoms

t statistic is we fail to reject

school size is not statistically significant

model022 = smf.ols("math10 ~ ltotcomp + lstaff + lenroll", data=meap93).fit()

model022.summary2().tables[1].iloc[:, :2]If we take level log form, we reject

If enrollment increases by 10%, math score is expected to decrease by 0.13 percentage points

model02.rsquared, model022.rsquared(np.float64(0.0540626230832697), np.float64(0.06537749365652679))level log model has higher so its better than level level model

4.3 Determinants of College GPA¶

wooldridge.data("gpa1", description=True)name of dataset: gpa1

no of variables: 29

no of observations: 141

+----------+--------------------------------+

| variable | label |

+----------+--------------------------------+

| age | in years |

| soph | =1 if sophomore |

| junior | =1 if junior |

| senior | =1 if senior |

| senior5 | =1 if fifth year senior |

| male | =1 if male |

| campus | =1 if live on campus |

| business | =1 if business major |

| engineer | =1 if engineering major |

| colGPA | MSU GPA |

| hsGPA | high school GPA |

| ACT | 'achievement' score |

| job19 | =1 if job <= 19 hours |

| job20 | =1 if job >= 20 hours |

| drive | =1 if drive to campus |

| bike | =1 if bicycle to campus |

| walk | =1 if walk to campus |

| voluntr | =1 if do volunteer work |

| PC | =1 of pers computer at sch |

| greek | =1 if fraternity or sorority |

| car | =1 if own car |

| siblings | =1 if have siblings |

| bgfriend | =1 if boy- or girlfriend |

| clubs | =1 if belong to MSU club |

| skipped | avg lectures missed per week |

| alcohol | avg # days per week drink alc. |

| gradMI | =1 if Michigan high school |

| fathcoll | =1 if father college grad |

| mothcoll | =1 if mother college grad |

+----------+--------------------------------+

Christopher Lemmon, a former MSU undergraduate, collected these data

from a survey he took of MSU students in Fall 1994.

model03 = smf.ols("colGPA ~ hsGPA + ACT + skipped", data=gpa1).fit()

model03.summary2().tables[1].iloc[:, :2]In two sided alternatives, 5% critical value is 1.96 at df = and 1% at 2.58

hsGPA has

ACT has small coefficient and is practically and statistically insignificant

skipped has statistically significant

4.4 Campus Crime and Enrollment¶

wooldridge.data("campus", description=True)name of dataset: campus

no of variables: 7

no of observations: 97

+----------+-----------------------+

| variable | label |

+----------+-----------------------+

| enroll | total enrollment |

| priv | =1 if private college |

| police | employed officers |

| crime | total campus crimes |

| lcrime | log(crime) |

| lenroll | log(enroll) |

| lpolice | log(police) |

+----------+-----------------------+

These data were collected by Daniel Martin, a former MSU

undergraduate, for a final project. They come from the FBI Uniform

Crime Reports and are for the year 1992.

model04 = smf.ols("lcrime ~ lenroll", data=campus).fit()

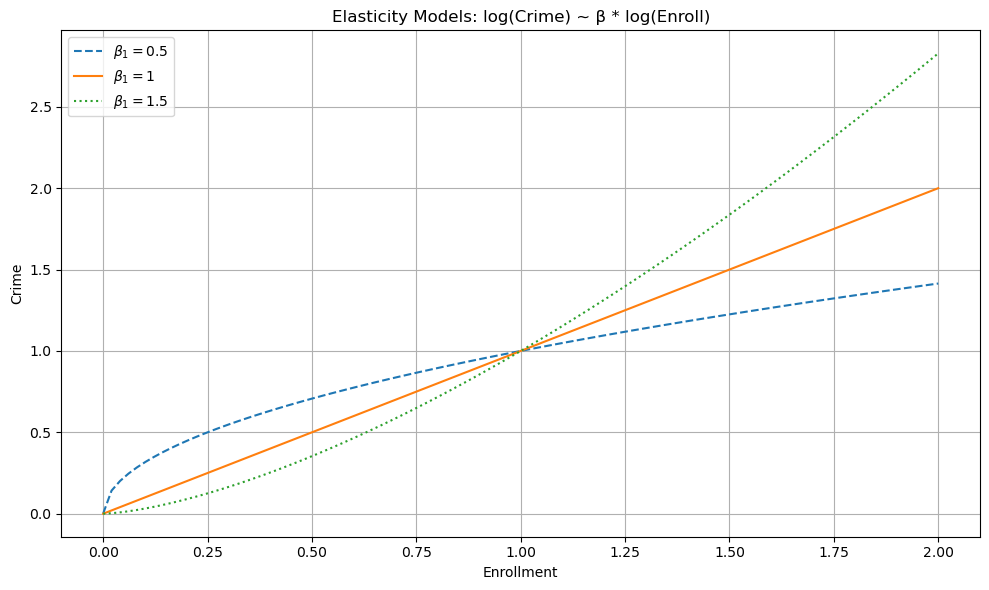

model04.summary2().tables[1].iloc[:, :2]We didn’t test for cuz its expected, is more interesting, if , then crime is a big problem on large campuses

enroll = np.linspace(0.000001, 2, 100)

crime_elastic_05 = np.exp(0.5 * np.log(enroll)) # β = 0.5

crime_elastic_1 = np.exp(1.0 * np.log(enroll)) # β = 1.0

crime_elastic_15 = np.exp(1.5 * np.log(enroll)) # β = 1.5

plt.figure(figsize=(10, 6))

plt.plot(enroll, crime_elastic_05, label=r"$\beta_1 = 0.5$", linestyle="--")

plt.plot(enroll, crime_elastic_1, label=r"$\beta_1 = 1$", linestyle="-")

plt.plot(enroll, crime_elastic_15, label=r"$\beta_1 = 1.5$", linestyle=":")

plt.xlabel("Enrollment")

plt.ylabel("Crime")

plt.title("Elasticity Models: log(Crime) ~ β * log(Enroll)")

plt.legend()

plt.grid(True)

plt.tight_layout()

plt.show()

model04.t_test("lenroll = 1").tvalue[0]array([2.45736997])4.5 Housing Prices and Air Pollution¶

wooldridge.data("hprice2", description=True)name of dataset: hprice2

no of variables: 12

no of observations: 506

+----------+-------------------------------+

| variable | label |

+----------+-------------------------------+

| price | median housing price, $ |

| crime | crimes committed per capita |

| nox | nit ox concen; parts per 100m |

| rooms | avg number of rooms |

| dist | wght dist to 5 employ centers |

| radial | access. index to rad. hghwys |

| proptax | property tax per $1000 |

| stratio | average student-teacher ratio |

| lowstat | perc of people 'lower status' |

| lprice | log(price) |

| lnox | log(nox) |

| lproptax | log(proptax) |

+----------+-------------------------------+

D. Harrison and D.L. Rubinfeld (1978), “Hedonic Housing Prices and the

Demand for Clean Air,” by Harrison, D. and D.L.Rubinfeld, Journal of

Environmental Economics and Management 5, 81-102. Diego Garcia, a

former Ph.D. student in economics at MIT, kindly provided these data,

which he obtained from the book Regression Diagnostics: Identifying

Influential Data and Sources of Collinearity, by D.A. Belsey, E. Kuh,

and R. Welsch, 1990. New York: Wiley.

model05 = smf.ols("lprice ~ lnox + np.log(dist) + rooms + stratio", data=hprice2).fit()

model05.summary2().tables[1].iloc[:, :2]t is so small, we fail to reject

model05.t_test("lnox = -1").tvalue[0]array([0.3979827])4.6 Participation Rates in 401(k) Plans¶

wooldridge.data("401k", description=True)name of dataset: 401k

no of variables: 8

no of observations: 1534

+----------+---------------------------------+

| variable | label |

+----------+---------------------------------+

| prate | participation rate, percent |

| mrate | 401k plan match rate |

| totpart | total 401k participants |

| totelg | total eligible for 401k plan |

| age | age of 401k plan |

| totemp | total number of firm employees |

| sole | = 1 if 401k is firm's sole plan |

| ltotemp | log of totemp |

+----------+---------------------------------+

L.E. Papke (1995), “Participation in and Contributions to 401(k)

Pension Plans:Evidence from Plan Data,” Journal of Human Resources 30,

311-325. Professor Papke kindly provided these data. She gathered them

from the Internal Revenue Service’s Form 5500 tapes.

model06 = smf.ols("prate ~ mrate + age + totemp", data=k401).fit()

model06.summary2().tables[1].iloc[:, :4]All variables are statistically significant, but totemp has very small coefficient making it practically insignificant

4.7 Effect of Job Training On Firm Scrap Rates¶

wooldridge.data("jtrain", description=True)name of dataset: jtrain

no of variables: 30

no of observations: 471

+----------+---------------------------------+

| variable | label |

+----------+---------------------------------+

| year | 1987, 1988, or 1989 |

| fcode | firm code number |

| employ | # employees at plant |

| sales | annual sales, $ |

| avgsal | average employee salary |

| scrap | scrap rate (per 100 items) |

| rework | rework rate (per 100 items) |

| tothrs | total hours training |

| union | =1 if unionized |

| grant | = 1 if received grant |

| d89 | = 1 if year = 1989 |

| d88 | = 1 if year = 1988 |

| totrain | total employees trained |

| hrsemp | tothrs/totrain |

| lscrap | log(scrap) |

| lemploy | log(employ) |

| lsales | log(sales) |

| lrework | log(rework) |

| lhrsemp | log(1 + hrsemp) |

| lscrap_1 | lagged lscrap; missing 1987 |

| grant_1 | lagged grant; assumed 0 in 1987 |

| clscrap | lscrap - lscrap_1; year > 1987 |

| cgrant | grant - grant_1 |

| clemploy | lemploy - lemploy[_n-1] |

| clsales | lavgsal - lavgsal[_n-1] |

| lavgsal | log(avgsal) |

| clavgsal | lavgsal - lavgsal[_n-1] |

| cgrant_1 | cgrant[_n-1] |

| chrsemp | hrsemp - hrsemp[_n-1] |

| clhrsemp | lhrsemp - lhrsemp[_n-1] |

+----------+---------------------------------+

H. Holzer, R. Block, M. Cheatham, and J. Knott (1993), “Are Training

Subsidies Effective? The Michigan Experience,” Industrial and Labor

Relations Review 46, 625-636. The authors kindly provided the data.

df = jtrain[(jtrain["year"] == 1987) & (jtrain["union"] == 0)]

model07 = smf.ols(

"np.log(scrap)~hrsemp + np.log(sales) + np.log(employ)", data=df

).fit()

model07.summary2().tables[1].iloc[:, :4]One more hour of training per employee [hrsemp] lowers scrap by 2.9%

t statistic is small, its not statistically significant

model07.nobs29.0len(df)126cols = ["scrap", "hrsemp", "sales", "employ"]

df[cols].dropna().shape[0]29Although the data has 126 rows, only 29 rows have no missing data in the rows of columns we use in the regression

from scipy import stats

coef = model07.params["hrsemp"]

se = model07.bse["hrsemp"]

t_stat = coef / se

# One-sided p-value (lower tail)

stats.t.cdf(t_stat, df=model07.df_resid)np.float64(0.10555164060312987)Due to the small sample size, we calculate p value for one sided test at 10% significance level and get 0.106, its almost rejected

4.8 Model of R&D Expenditures¶

wooldridge.data("rdchem", description=True)name of dataset: rdchem

no of variables: 8

no of observations: 32

+----------+-----------------------------+

| variable | label |

+----------+-----------------------------+

| rd | R&D spending, millions |

| sales | firm sales, millions |

| profits | profits, millions |

| rdintens | rd as percent of sales |

| profmarg | profits as percent of sales |

| salessq | sales^2 |

| lsales | log(sales) |

| lrd | log(rd) |

+----------+-----------------------------+

From Businessweek R&D Scoreboard, October 25, 1991.

model08 = smf.ols("lrd ~ lsales + profmarg", data=rdchem).fit()

model08.summary2().tables[1]Holding profit margin fixed, 1% increase in sales is associated with 1.084% increase in R&D spending

The 95% Confidence interval is [0.96 - 1.21] which doesn’t include 0 leading to rejection of

But it includes the unity 1, so 1% increase in sales is associated with 1% increase in R&D spending

The 95% Confidence interval for profit margin is [-0.005, 0.05] which includes the zero, we fail to reject at the 5% significance level using a two-sided test

However, using a one-sided alternative hypothesis , the one-sided p-value is 0.0504, making the coefficient statistically significant at the 5% level.

This suggests that a 1-unit increase in profit margin, holding sales constant, is associated with a 2.2% increase in R&D spending, on average.

coef = model08.params["profmarg"]

se = model08.bse["profmarg"]

t_stat = coef / se

# One-sided p-value (upper tail)

1 - stats.t.cdf(t_stat, df=model08.df_resid)np.float64(0.05047604323767163)4.9 Parents Education in a Birth Weight Equation¶

wooldridge.data("bwght", description=True)name of dataset: bwght

no of variables: 14

no of observations: 1388

+----------+--------------------------------+

| variable | label |

+----------+--------------------------------+

| faminc | 1988 family income, $1000s |

| cigtax | cig. tax in home state, 1988 |

| cigprice | cig. price in home state, 1988 |

| bwght | birth weight, ounces |

| fatheduc | father's yrs of educ |

| motheduc | mother's yrs of educ |

| parity | birth order of child |

| male | =1 if male child |

| white | =1 if white |

| cigs | cigs smked per day while preg |

| lbwght | log of bwght |

| bwghtlbs | birth weight, pounds |

| packs | packs smked per day while preg |

| lfaminc | log(faminc) |

+----------+--------------------------------+

J. Mullahy (1997), “Instrumental-Variable Estimation of Count Data

Models: Applications to Models of Cigarette Smoking Behavior,” Review

of Economics and Statistics 79, 596-593. Professor Mullahy kindly

provided the data. He obtained them from the 1988 National Health

Interview Survey.

df = bwght[["bwght", "cigs", "parity", "faminc", "motheduc", "fatheduc"]].dropna()

model09 = smf.ols("bwght ~ cigs + parity + faminc + motheduc + fatheduc", data=df).fit()

model09.summary2().tables[1]model09r = smf.ols("bwght ~ cigs + parity + faminc", data=df).fit()

model09r.summary2().tables[1]R2_ur = model09.rsquared

R2_r = model09r.rsquared

n = model09.nobs

k_ur = model09.df_model + 1

q = 2numerator = (R2_ur - R2_r) / q

denominator = (1 - R2_ur) / (n - k_ur)

F_stat = numerator / denominator

F_statnp.float64(1.437268638975128)To test the joint significance of parents’ education, we must ensure both the unrestricted and restricted models use the same observations.

Therefore, we first subset the data to exclude any rows with missing values in the unrestricted model. This ensures both models are estimated on the same sample, allowing a valid comparison.

Note: If you use statsmodels’s built-in .f_test() function after fitting the unrestricted model, it automatically uses the correct sample. So you do not need to drop missing values manually in that case.

model092 = smf.ols(

"bwght ~ cigs + parity + faminc + motheduc + fatheduc", data=bwght

).fit()

model092.f_test("motheduc = 0, fatheduc = 0")<class 'statsmodels.stats.contrast.ContrastResults'>

<F test: F=1.4372686389751854, p=0.23798962194786966, df_denom=1.18e+03, df_num=2>We fail to reject the null hypothesis. In other words, parents education is jointly insignificant

4.10 Salary-Pension Tradeoff for Teachers¶

if totcomp [total compensation] includes annual salary of teacher and benefits [like insurance]

then

which can be rewritten as

take the log to get

for small , we have the approximation

To understandard salary-benefits tradeoffs, get the econometric model

To test salary benefits tradeoff, test

The simple regression fails to reject the null, but it becomes significant after adding controls

df = meap93.copy()

df["bs_ratio"] = df["benefits"] / df["salary"]

model010 = smf.ols("lsalary ~ bs_ratio", data=df).fit()

model010.summary2().tables[1].iloc[:, :4]model010.t_test("bs_ratio = -1")<class 'statsmodels.stats.contrast.ContrastResults'>

Test for Constraints

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

c0 -0.8254 0.200 0.873 0.383 -1.218 -0.432

==============================================================================